Emo

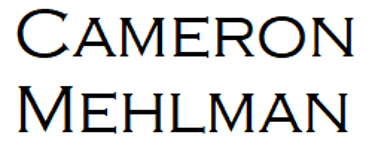

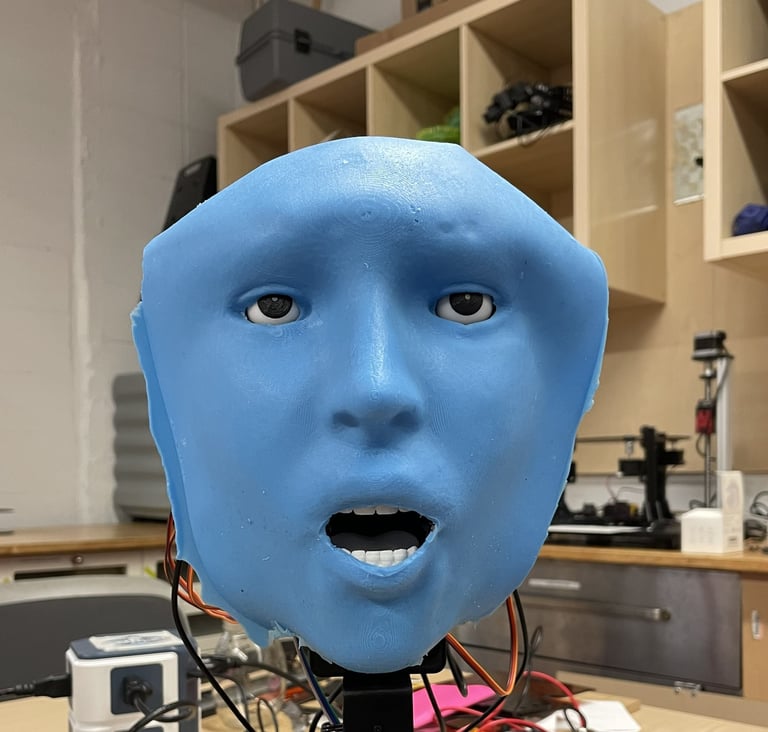

An animatronic human face

Emo is a project attempting to create an animatronic human face capable of mimicking speech as well as facial expressions. However, the human face is compact yet moves with many degrees of freedom, which presents multiple hurdles in both mechanical design and control. The robot consists of several modules (jaw and neck, mouth, eyes, and skin). The skin module attaches to the face via magnets, which are moved by several sets of servo motors to replicate human-like movement.

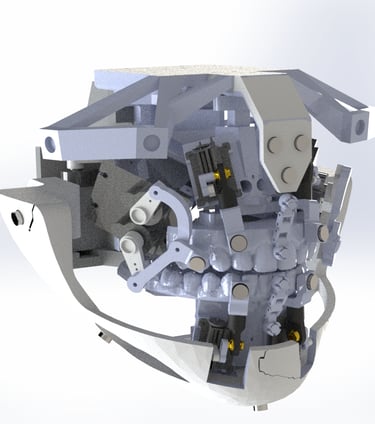

My first contribution to the project was to redesign the mouth and jaw modules. The new design uses 13 servos controlling 11 points of contact with the facial skin, providing 17 degrees of freedom. It adds degrees of freedom to the jaw, as well as rotational deformation in the lips, which did not exist in earlier versions.

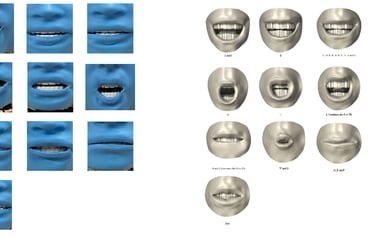

Beyond design, I also contributed to the control methods used, which realistically animate the robot's mouth to mimic human speech patterns. Our initial goal for this aspect of the project was to fit the robot's facial pose to a human's facial pose. We used facial recognition software available through the dlib library in python to compute key facial landmarks on a human's face from thumbnails of a video. Then, we calculated the position of each magnet in the mouth using the inverse kinematics and used a K-nearest neighbor algorithm to create a best fit. Unfortunately, this method did not show optimal results. Our second attempt involved manually fitting the robot's mouth pose to twelve different poses taken from the Preston

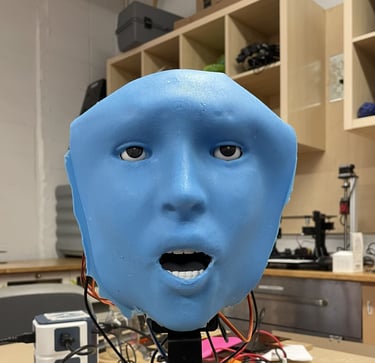

A CAD renderering of the updated mouth and jaw module design

Emo's replication of the Preston Blaire Phoneme Series against an animation

Blaire Phoneme Series, a series of mouth poses used by animators to mimic speech. The team then trained a classifier via supervised learning capable of identifying when to use each of the ten poses. This second method provided much more promising results.
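The first approach, matching a human's mouth landmarks to the robot's magnet positions with k-nearest neighbors, can be sketched roughly as follows. This is a minimal illustration under stated assumptions and is not the project's code. It assumes a hypothetical `pose_table` of precomputed robot poses, where each entry pairs 2D magnet positions with the servo angles that produce them. It also assumes the human landmarks (for example, the mouth points from dlib) arrive in the same order as the magnets. Both shapes are normalized for position and scale, and the servo angles of the `k` closest stored poses are averaged.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
# Hypothetical table entry: (magnet positions, servo angles in degrees).
PoseEntry = Tuple[Sequence[Point], Sequence[float]]


def normalize(points: Sequence[Point]) -> List[Point]:
    """Center points on their centroid and scale to unit RMS radius, so a
    human mouth and the robot mouth can be compared regardless of size or
    position in the image."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered) / n) or 1.0
    return [(x / scale, y / scale) for x, y in centered]


def shape_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Euclidean distance between two equally ordered point sets."""
    return math.sqrt(sum((ax - bx) ** 2 + (ay - by) ** 2
                         for (ax, ay), (bx, by) in zip(a, b)))


def knn_servo_command(human_landmarks: Sequence[Point],
                      pose_table: Sequence[PoseEntry],
                      k: int = 3) -> List[float]:
    """Return the mean servo angles of the k stored poses whose magnet
    layout is closest to the human's mouth landmarks."""
    query = normalize(human_landmarks)
    ranked = sorted(pose_table,
                    key=lambda entry: shape_distance(query, normalize(entry[0])))
    nearest = ranked[:k]
    n_servos = len(nearest[0][1])
    return [sum(angles[i] for _, angles in nearest) / len(nearest)
            for i in range(n_servos)]


# Hypothetical two-entry table with four magnets and two servos.
pose_table = [
    ([(0, 0), (2, 0), (1, 1), (1, -1)], [90.0, 90.0]),       # open mouth
    ([(0, 0), (2, 0), (1, 0.2), (1, -0.2)], [80.0, 100.0]),  # nearly closed
]
# A larger, shifted copy of the "open mouth" shape.
human = [(10, 10), (14, 10), (12, 12), (12, 8)]
print(knn_servo_command(human, pose_table, k=1))  # [90.0, 90.0]
```

With `k=1` the sketch returns the single closest stored pose. Larger `k` blends neighboring poses, which may smooth the output but can also average away distinct mouth shapes.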
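The second approach treats mouth animation as supervised classification: each input frame is labeled with one of the hand-fitted mouth poses, and a trained model chooses which pose to show. The text does not say which inputs or which model the team used. The sketch below therefore swaps in a simple nearest-centroid classifier over hypothetical per-frame feature vectors. The pose labels "AI", "O", and "rest" are illustrative stand-ins for entries in the phoneme series.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


class NearestCentroidPoseClassifier:
    """Toy supervised classifier: learns the mean feature vector of each
    labeled mouth pose and assigns new frames to the closest mean."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, features: Sequence[Sequence[float]],
            labels: Sequence[str]) -> "NearestCentroidPoseClassifier":
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = defaultdict(int)
        for x, label in zip(features, labels):
            # Accumulate a running sum of feature vectors per pose label.
            acc = sums.setdefault(label, [0.0] * len(x))
            for i, value in enumerate(x):
                acc[i] += value
            counts[label] += 1
        self.centroids = {label: [s / counts[label] for s in acc]
                          for label, acc in sums.items()}
        return self

    def predict(self, x: Sequence[float]) -> str:
        # Pick the pose whose centroid is nearest in Euclidean distance.
        return min(self.centroids,
                   key=lambda label: math.dist(x, self.centroids[label]))

    def predict_sequence(self, frames: Sequence[Sequence[float]]) -> List[str]:
        # One pose label per frame, in order, to drive the mouth over time.
        return [self.predict(x) for x in frames]


# Hypothetical 2D per-frame features, labeled with pose names.
train_x = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8], [0.0, 0.0], [0.05, 0.05]]
train_y = ["AI", "AI", "O", "O", "rest", "rest"]
clf = NearestCentroidPoseClassifier().fit(train_x, train_y)
print(clf.predict_sequence([[0.02, 0.01], [0.85, 0.15], [0.15, 0.85]]))
# ['rest', 'AI', 'O']
```

A real system would likely use richer features and a stronger model. Even so, the structure stays the same: labeled examples in, one discrete mouth pose per frame out. That discrete output is what makes the pose-library approach easier to control than continuous landmark fitting.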